How to Read a Scientific Study Without Getting Played

Seven Pro Tips on Reading Scientific Studies for Non-Scientists and Citizen Scientists.

Science is a process, not a gospel.

A published study isn’t a stone tablet of truth—it’s a conversation starter.

It’s a piece of evidence that might be flawed, biased, or even flat-out wrong. Yet, many people treat studies like sacred texts. Bad science can be used to sell you garbage, justify harmful policies, or reinforce old prejudices.

The result? You sound like a misinformed jackass. Or worse, you base a moral stance or a policy on deeply flawed, even harmful, science.

So, let’s talk about how to spot the BS and cut through the nonsense.

Step One: Who Paid for It?

If Big Tobacco funds a study on lung cancer, would you trust it? What about a pharmaceutical company sponsoring research on its own drug? Or worse, promoting diagnoses that boost sales of its drugs? (See future article on problems with the DSM-5.) Funding sources matter (Lexchin et al., 2003). Money doesn’t just influence results; it can shape the entire study design, from the questions asked to the data that gets buried. Always check the conflict-of-interest section.

Step Two: How Big Was the Study?

(a.k.a. checking the sample size of the population, as well as the demographic details within it)

A study of 12 people doesn’t mean much. The bigger the sample size, the more reliable the results. But even large studies can be misleading if they don’t represent the real world. Did they study 10,000 people? Cool. But if all 10,000 were upper-middle-class white men from one city, those findings might not apply to anyone else.

The sample should be representative of the target population studied.
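To see why both size and representativeness matter, here is a minimal Python sketch. Everything in it is made up for illustration: the two groups, their response rates, and the function names are assumptions, not data from any real study. It simulates a population split evenly between two groups that respond differently to a treatment, then compares tiny random studies, one large random study, and one large study that only recruited from a single group.

```python
import random

random.seed(42)  # fixed seed so the sketch is repeatable

# Hypothetical population: two equally sized groups with different
# true response rates to some treatment (numbers are invented).
RATE_GROUP_A = 0.25
RATE_GROUP_B = 0.75
TRUE_OVERALL_RATE = (RATE_GROUP_A + RATE_GROUP_B) / 2

def draw_person(only_group_a=False):
    """Return True if one randomly drawn person responds to the treatment."""
    in_group_a = only_group_a or random.random() < 0.5
    rate = RATE_GROUP_A if in_group_a else RATE_GROUP_B
    return random.random() < rate

def estimate_rate(sample_size, only_group_a=False):
    """Share of responders observed in a simulated study of the given size."""
    responders = sum(draw_person(only_group_a) for _ in range(sample_size))
    return responders / sample_size

small_estimates = [estimate_rate(12) for _ in range(5)]
large_estimate = estimate_rate(10_000)
biased_estimate = estimate_rate(10_000, only_group_a=True)

print("true overall rate:        ", TRUE_OVERALL_RATE)
print("five studies of 12 people:", [round(e, 2) for e in small_estimates])
print("one study of 10,000:      ", round(large_estimate, 3))
print("10,000 from group A only: ", round(biased_estimate, 3))
```

In runs like this, the 12-person studies tend to bounce around, the large random study lands close to the true rate, and the large one-group study lands confidently on the wrong number. More people shrinks random noise; it does nothing for a sample that was lopsided to begin with.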

Medical history shows us that this wasn’t always the case. Traditionally, the human reference standard was a 70-kilogram white male. Other bodies (larger, smaller, of different ethnicities, even women’s) were treated as variations on this standard. This logic has had serious implications for the human experience, from medicine to pharmaceuticals to engineering.

Step Three: How valid is this research to real-world conditions?

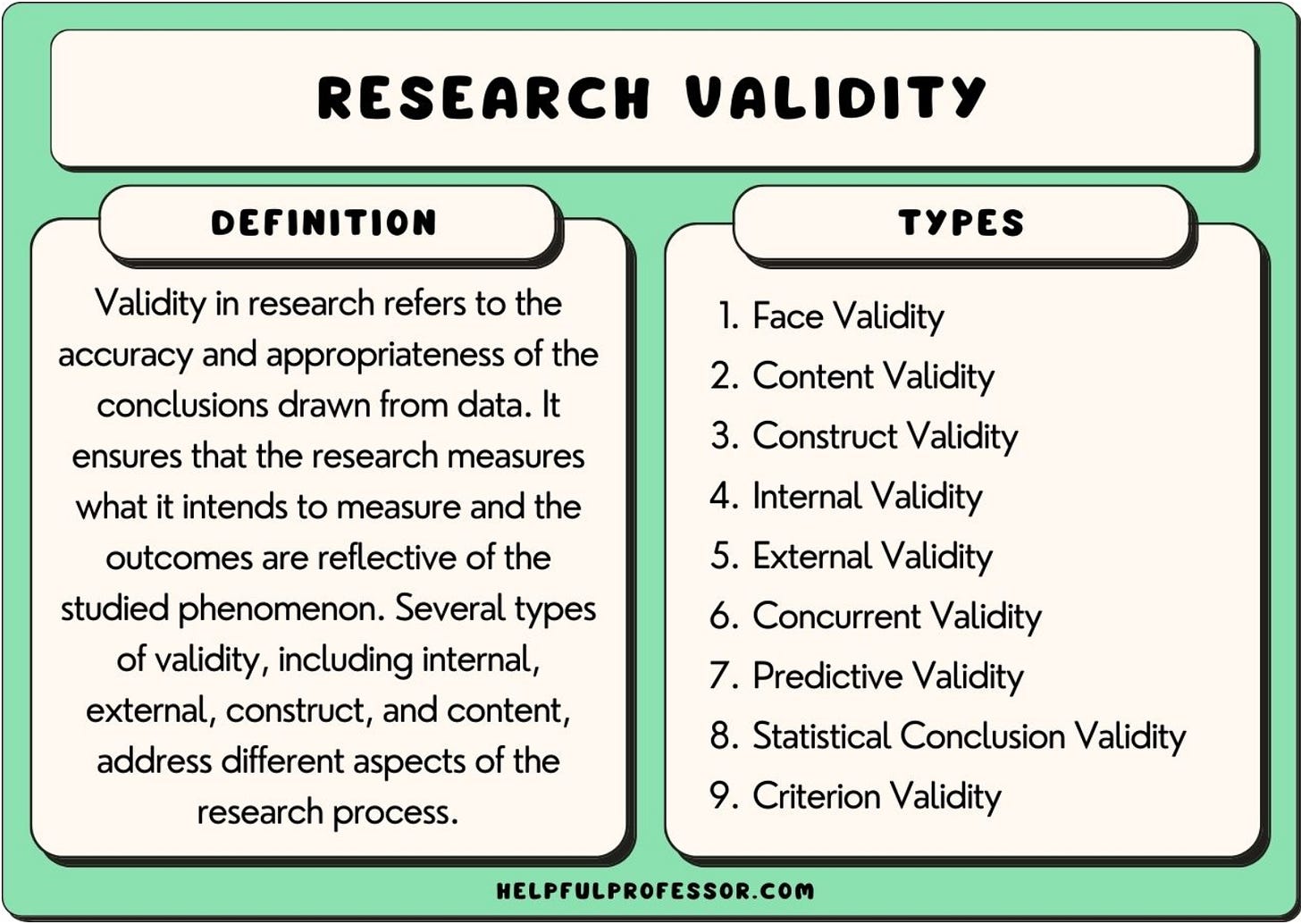

Validity ensures a study actually measures what it claims to. While I’ve seen up to 9 types of validity, however, for starters, there are two key types to watch:

(Image Source: HelpfulProfessor.com, Cornell, 2024)

• Internal Validity: Does the study design properly test what it’s supposed to?

Were there confounding factors? Confounding factors are like hidden troublemakers that can mix up results in a study. Imagine you’re trying to see if eating more ice cream makes people happier. But at the same time, it’s summer, and warm weather also makes people happier. If you don’t account for the heat, you might mistakenly think ice cream alone is causing happiness when really, the weather plays a big role too.

Was the methodology strong? Compare the strength of methodology with the quality of instructions for a recipe when baking a cake. If the recipe is clear, uses the right ingredients, and follows good steps, you’ll get a great cake every time.

But if the instructions are messy, the wrong ingredients are used, or steps are skipped, the cake might not turn out right. This affects the ability of other scientists to replicate the research and get the same results. Any cook or baker who has boiled water or tried to bake bread at high altitude knows exactly how frustrating it can be to follow an unadapted recipe.

In research, a strong methodology means the study is well-planned, follows good steps, and avoids mistakes, so the results are trustworthy and easily replicated.

If the method is weak, the results might be wrong or misleading, like a cake that falls apart.

• External Validity: Can these findings apply outside of a controlled lab setting? Something that works in a perfect, sterile environment might fail in the messy, unpredictable real world (Ioannidis, 2005).

Ask Yourself: Does this study hold up in the real world, with other populations?

In another part of the world? Or just in perfect or limited conditions?

Validity refers to whether or not a test or an experiment is actually doing what it is intended to do.

Step Four: What Are They Really Measuring?

Science loves numbers, but not all numbers mean something. They may tell you what, but not how or why. Some studies mix up correlation (two things happening together) with causation (one thing causing the other).

Take the Ice Cream and Polio Panic as an example.

Back in the early 20th century, polio cases spiked during the summer. At the same time, ice cream sales also went up. Some people panicked and thought eating ice cream might cause polio. But the real reason polio surged wasn’t frozen desserts—it was because kids were in closer contact in the summer, spreading the virus.

Ice cream just happened to be a seasonal trend that lined up with polio outbreaks.

If a study claims one thing causes another, ask: Is there another explanation?
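The ice cream story can be played out in a few lines of Python. This is a toy simulation under loudly stated assumptions: the weekly numbers are invented, the season is the only thing driving both ice cream sales and polio cases, and neither one affects the other. The `pearson` helper is a plain textbook correlation written out by hand.

```python
import random
from statistics import mean

random.seed(7)  # fixed seed so the sketch is repeatable

def pearson(xs, ys):
    """Pearson correlation: +1 means moving together, 0 means unrelated."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5

# Each simulated week: (is_summer, ice_cream_sales, polio_cases).
# Season pushes BOTH numbers up; they never influence each other.
weeks = []
for _ in range(400):
    is_summer = random.random() < 0.5
    ice_cream_sales = random.gauss(100 if is_summer else 40, 10)
    polio_cases = random.gauss(40 if is_summer else 15, 4)
    weeks.append((is_summer, ice_cream_sales, polio_cases))

def corr_for(rows):
    """Correlation between ice cream sales and polio cases for given weeks."""
    return pearson([r[1] for r in rows], [r[2] for r in rows])

overall = corr_for(weeks)
summer_only = corr_for([w for w in weeks if w[0]])
winter_only = corr_for([w for w in weeks if not w[0]])

print("all weeks together:", round(overall, 2))
print("summer weeks only: ", round(summer_only, 2))
print("winter weeks only: ", round(winter_only, 2))
```

Lumped together, the two numbers look tightly linked. Split the weeks by season and the link should mostly vanish, which is what you would expect if the season, not the ice cream, is doing the work. Checking whether a relationship survives once you hold the suspected "other explanation" fixed is one simple way to probe a causal claim.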

Step Five: Is Race Being Used Like It’s Biology?

Race is not biological; it’s a social construct. (Meaning: people made it up to classify people.) Yet medical research often treats “Black” or “White” as a genetic trait. Neither is.

This is where Systems Thinking comes in.

In healthcare, "systems thinking" refers to a holistic approach that views the healthcare system as a network of interconnected parts, where changes in one area can affect others. It focuses on understanding how these components interact to influence patient outcomes, rather than addressing individual problems in isolation. Essentially, it's about looking at the whole picture (social, environmental, and historical exposures, etc.) to identify and solve complex health issues by considering all relevant factors within the system.

Instead of looking at systemic factors (poverty, discrimination, access to healthcare, history of oppression), studies often blame racial groups for their own health outcomes.

If a study talks about race but ignores structural inequality (look up: Social Determinants of Health), be suspicious.

Step Six: Can Anyone Else Replicate It?

One study doesn’t prove anything.

Scientific claims get stronger when other researchers can repeat the experiment and get the same results. Making replication possible is one of the main reasons findings are published. Check the year the study was performed and look for related studies from before and after that date.

If no one else has been able to replicate the findings, it might be junk science.

At best, it’s a preliminary study - primed for further research.
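Here is a rough Python sketch of why a single study can fool you. It is a simulation under assumptions I chose for illustration: 2,000 hypothetical labs each test a treatment that does nothing at all, using two groups of 50 people and the common "p < 0.05" cutoff. The group size, number of labs, and function name are all made up.

```python
import random

random.seed(2025)  # fixed seed so the sketch is repeatable

GROUP_SIZE = 50   # people per group (hypothetical)
STUDIES = 2_000   # number of independent labs testing the same idea
Z_CUTOFF = 1.96   # the usual "p < 0.05" threshold for a two-sided test

def run_null_study():
    """Compare two groups drawn from the SAME distribution (no real effect).

    Returns True if the study would still report a 'significant' difference.
    """
    treated = [random.gauss(0, 1) for _ in range(GROUP_SIZE)]
    control = [random.gauss(0, 1) for _ in range(GROUP_SIZE)]
    difference = sum(treated) / GROUP_SIZE - sum(control) / GROUP_SIZE
    standard_error = (2 / GROUP_SIZE) ** 0.5  # known spread of 1 in each group
    return abs(difference / standard_error) > Z_CUTOFF

first_round = [run_null_study() for _ in range(STUDIES)]
false_positives = sum(first_round)

# Every "significant" study gets one independent replication attempt.
replicated = sum(run_null_study() for _ in range(false_positives))

print(f"studies finding an effect that isn't there: {false_positives} of {STUDIES}")
print(f"of those, confirmed by a replication:       {replicated}")
```

With a 0.05 cutoff, roughly one in twenty of these no-effect studies should clear the bar by luck alone, and any one of them could become a headline. Very few of those lucky results should survive a second, independent attempt, which is exactly why replication matters.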

Step Seven: What’s the Agenda?

Not all research is independent.

Corporations, government agencies, and interest groups fund studies, and their money often influences results. The sugar industry (Kearns et al., 2016) and the tobacco industry (Proctor, 2012), both covered below, are textbook cases.

Some research exists just to push a narrative. If a study claims that climate change isn’t real, but it’s backed by an oil company, you already know what’s up. Always ask: Who benefits if this study is widely believed?

A great example of industry-funded deception comes from the sugar industry.

In the 1960s, the Sugar Research Foundation paid Harvard scientists to downplay sugar’s link to heart disease and shift the blame onto dietary fat. Their research, published in the New England Journal of Medicine, shaped decades of dietary recommendations that demonized fat while letting sugar-heavy foods off the hook (Kearns et al., 2016). The result? A public health disaster, with skyrocketing rates of obesity, diabetes, and heart disease.

A few decades later, the corn syrup industry pulled a similar trick. As concerns grew over high-fructose corn syrup (HFCS), the Corn Refiners Association funded studies claiming HFCS was no different from regular sugar (a position argued in White, 2008), despite independent research suggesting it may play a role in the obesity epidemic (Bray et al., 2004). They even tried to rebrand HFCS as “corn sugar” to make it sound healthier.

The sugar industry also attacked stevia, a natural zero-calorie sweetener.

In 1991, the FDA banned stevia from being marketed as a sweetener, citing “safety concerns.” Who benefited? The sugar industry and artificial sweetener manufacturers like Monsanto (which owned NutraSweet at the time).

Stevia had been used safely in South America for centuries, and Japan had already been using it without issues since the 1970s (Kinghorn, 2002).

For nearly two decades, stevia was only allowed in the U.S. as a “dietary supplement”—meaning it couldn’t be marketed as a sugar alternative. But once big corporations like Coca-Cola and Cargill patented their own stevia extracts, the FDA suddenly approved stevia as “Generally Recognized as Safe” (GRAS) in 2008. The catch? Only the corporate-controlled, patented versions (Lemus-Mondaca et al., 2012).

The tobacco industry perfected this strategy first, using industry-funded science to cast doubt on smoking’s link to lung cancer for decades (Proctor, 2012).

The playbook is always the same: fund studies that muddy the waters, create confusion, and keep profits rolling in.

Final Takeaway: Stay Skeptical, Be Objective, Stay Curious

Science is a tool, not a religion. It’s powerful, but it’s also messy.

Knowing how to question research makes you harder to manipulate.

Whether it’s a news article claiming that coffee cures cancer or a politician promoting government policy based on shady statistics, always ask: Does this make sense?

And remember—real scientists love questions and talking about their research.

If someone tells you to stop questioning the data or is vague on the details, that’s a red flag.

_________________________________________________________________________

Works Cited:

Bray, G. A., Nielsen, S. J., & Popkin, B. M. (2004). Consumption of high-fructose corn syrup in beverages may play a role in the epidemic of obesity. The American Journal of Clinical Nutrition, 79(4), 537–543. https://doi.org/10.1093/ajcn/79.4.537

Cornell, D. (2023). 9 Types of Validity in Research. Retrieved February 22, 2025, from Helpful Professor website: https://helpfulprofessor.com/types-of-validity/

Ioannidis, J. P. A. (2005). Why most published research findings are false. PLoS Medicine, 2(8), e124. https://doi.org/10.1371/journal.pmed.0020124

Kearns, C. E., Schmidt, L. A., & Glantz, S. A. (2016). Sugar industry and coronary heart disease research: A historical analysis of internal industry documents. JAMA Internal Medicine, 176(11), 1680–1685. https://doi.org/10.1001/jamainternmed.2016.5394

Kinghorn, A. D. (2002). Stevia: The genus Stevia. Taylor & Francis.

Krieger, N. (2005). Stormy weather: Race, gene expression, and the science of health disparities. American Journal of Public Health, 95(12), 2155–2160. https://doi.org/10.2105/AJPH.2005.067108

Lemus-Mondaca, R., Vega-Gálvez, A., Zura-Bravo, L., & Ah-Hen, K. (2012). Stevia rebaudiana Bertoni, source of a high-potency natural sweetener: A comprehensive review on the biochemical, nutritional, and functional aspects. Food Chemistry, 132(3), 1121–1132. https://doi.org/10.1016/j.foodchem.2011.11.140

Lexchin, J., Bero, L. A., Djulbegovic, B., & Clark, O. (2003). Pharmaceutical industry sponsorship and research outcome and quality: Systematic review. BMJ, 326(7400), 1167–1170. https://doi.org/10.1136/bmj.326.7400.1167

Paul, J. R. (1971). A history of poliomyelitis. Yale University Press.

Proctor, R. N. (2012). Golden holocaust: Origins of the cigarette catastrophe and the case for abolition. University of California Press.

Systems thinking. (2021). Retrieved February 22, 2025, from Who.int website: https://ahpsr.who.int/what-we-do/thematic-areas-of-focus/systems-thinking

White, J. S. (2008). Straight talk about high-fructose corn syrup: What it is and what it ain’t. The American Journal of Clinical Nutrition, 88(6), 1716S–1721S. https://doi.org/10.1093/ajcn/88.6.1716S